Ever had a long, insightful conversation with ChatGPT, only to find that it suddenly starts forgetting things, giving weird responses, or just gets incredibly slow? If you’ve been deep into a chat thread and noticed that the bot starts to hallucinate or make up information, you’re not alone.

This article explains why this happens, what’s going on behind the scenes, and how you can fix or work around it — all by understanding something called the context window.

What Is a Context Window?

Think of a context window as the short-term memory of a language model like ChatGPT, Gemini, Claude, or even local models like LLaMA or DeepSeek. These models, like humans, can remember a bunch of stuff — but only up to a point.

Imagine you’re catching up with a friend over coffee. In the first 15 minutes, you’re both sharp, remembering stories, jokes, and ideas. But keep that conversation going for hours, and it gets foggy. Important points might get lost, and you might even forget why the conversation started.

LLMs work the same way. The longer the chat, the harder it is for them to remember everything — unless you give them a larger “memory,” or in AI terms, a bigger context window.

Breaking Down the Context Window

1. Tokens: How AI Reads

AI doesn’t count words like humans do. It uses tokens. A token might be a whole word, part of a word, or a punctuation mark, often with the leading space attached. For example, the sentence “This is a test.” is 4 words to us but typically about 5 tokens to a language model, because the period counts as its own token. The exact count depends on the tokenizer used.

You can check token counts using tools like OpenAI’s tokenizer.
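To get a feel for how text breaks into tokens, here is a minimal Python sketch. It is a toy splitter (words and punctuation marks), not the byte-pair-encoding tokenizer that real models use, so treat its counts as a rough approximation. The function names `rough_tokens` and `rough_token_count` are made up for illustration.

```python
import re


def rough_tokens(text: str) -> list[str]:
    """Split text into word and punctuation pieces (a toy stand-in for a real tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", text)


def rough_token_count(text: str) -> int:
    """Count the pieces produced by the toy splitter."""
    return len(rough_tokens(text))


sentence = "This is a test."
print(rough_tokens(sentence))       # ['This', 'is', 'a', 'test', '.']
print(rough_token_count(sentence))  # 5
```

Real tokenizers also split rare or long words into several pieces, so for anything that matters, check the count with the tokenizer that belongs to your model.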

2. Context Limitations

Let’s say you’re using a model with a 2048-token context window. That means it can only keep track of 2048 tokens worth of conversation — which includes:

- Your questions

- The model’s responses

- Any hidden system prompts

- Any documents or code you’ve fed into it

Once you cross that limit, the oldest tokens fall out of the window and the model can no longer see them. It starts to forget earlier information, even important things you mentioned at the start.
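One common way a chat app can stay under the limit is to keep the system prompt and drop the oldest messages first. The Python sketch below illustrates that idea only; it is not how any particular app is implemented. The names `count_tokens` and `trim_history` are hypothetical, and the word-based token count is a deliberate simplification.

```python
def count_tokens(text: str) -> int:
    """Very rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep the system prompt plus the newest messages that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # this message and everything older is dropped
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "I am reading How to Take Smart Notes."},
    {"role": "assistant", "content": "Great choice, it is a classic on note-taking."},
    {"role": "user", "content": "Tell me a short story about a dragon."},
]

visible = trim_history(history, max_tokens=20)
print([m["content"] for m in visible])
```

With a 20-token budget, only the system prompt and the latest request survive, so the book title is gone from what the model sees. Raise `max_tokens` to 100 and all four messages fit again.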

Real Example: Memory Loss in Action

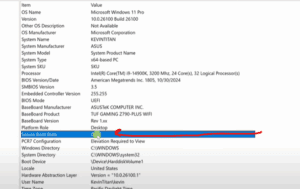

Using LM Studio, you might load a local model like Gemma 3 4B and set the context window to 2048 tokens. Say you begin by telling the model that you’re reading a book called How to Take Smart Notes. Initially, the model remembers.

But as you continue the conversation — asking for a story, then a sequel, then a prequel — the context fills up. By the time you’re past 2400 tokens, the model forgets that book title. It simply can’t keep up anymore.

Now increase the context window to 4096 tokens, reload the model, and suddenly it remembers the book again.

Increasing Context Windows: Pros and Cons

Modern language models now support massive context windows:

- GPT-4 Turbo: 128,000 tokens

- Claude 3.5: 200,000 tokens

- Gemini 1.5: Up to 1 million tokens

- Llama 4 Scout (Meta, open weights): up to 10 million tokens

Sounds great, right?

Well, not so fast. Larger context windows require significantly more:

- VRAM (video memory, especially important if you’re running local models)

- Compute power

- Time to process each message

For example, running Gemma 3 4B at its maximum context window on an NVIDIA RTX 4090 with 24 GB of VRAM might cause lag or even freeze your system. The bigger the context window, the more pressure it puts on your hardware.
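A large share of that extra VRAM goes to the key/value cache, which stores two tensors per layer for every token in the context. The Python sketch below estimates its size. The model shape is hypothetical and chosen for round numbers (it is not the configuration of Gemma or any other real model), and the estimate covers the cache only, not the model weights.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_tokens, bytes_per_value=2):
    """Memory for cached keys and values: 2 tensors (K and V) per layer, per token."""
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_value


# Hypothetical model shape with 16-bit values (2 bytes each).
config = dict(n_layers=32, n_kv_heads=8, head_dim=128)

for tokens in (2_048, 32_768, 131_072):
    gib = kv_cache_bytes(context_tokens=tokens, **config) / 2**30
    print(f"{tokens:>7} tokens -> {gib:.2f} GiB of KV cache")
# 2,048 tokens need 0.25 GiB; 32,768 need 4.00 GiB; 131,072 need 16.00 GiB
```

The cache grows in direct proportion to the context length, so under these assumptions a 64x longer window needs 64x more cache memory on top of the weights.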

Cloud-based models, on the other hand, handle large contexts more gracefully since they run on powerful servers — but they can still slow down or lose focus in very long conversations.

Why Large Contexts Still Struggle

Even with bigger memory, LLMs may still:

- Forget things in the middle of the conversation

- Lose accuracy

- Take longer to respond

This phenomenon was explored in the research paper “Lost in the Middle: How Language Models Use Long Contexts” (Liu et al., 2023), which showed that LLMs are most accurate when the relevant information sits at the start or end of the input and tend to struggle when it sits in the middle, resulting in a U-shaped accuracy curve.

How LLMs Pay Attention (Self-Attention Mechanism)

LLMs use something called self-attention to determine which parts of your conversation are important.

Let’s say you ask:

“I want coffee, but caffeine makes me jittery. What should I get?”

The model evaluates each word for relevance:

- “coffee,” “caffeine,” “jittery” – high relevance

- “I,” “want,” “should” – lower relevance

This “attention scoring” happens every single time you say something. In standard self-attention, every token is scored against every other token in the context, so the number of comparisons grows roughly with the square of the conversation length. That consumes more memory and GPU resources, which is why the model might become slower or forgetful.
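The scoring step can be sketched in a few lines of plain Python. This is a simplified illustration of scaled dot-product attention weights: the 2-dimensional vectors are made up, each vector serves as both query and key, and there are no learned projections or masking as a real model would have. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(vectors):
    """Scaled dot-product attention weights, using each vector as both query and key."""
    scale = math.sqrt(len(vectors[0]))
    weights = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        weights.append(softmax(scores))
    return weights  # n rows of n weights: one score per token pair


# Made-up 2-dimensional "embeddings"; real models learn much larger ones.
tokens = ["coffee", "caffeine", "jittery", "I"]
vectors = [[1.0, 0.9], [0.9, 1.0], [0.8, 0.7], [0.1, 0.0]]

for token, row in zip(tokens, attention_weights(vectors)):
    print(token, [round(w, 2) for w in row])

# The score table has one entry per pair of tokens, so it grows quadratically.
for n in (1_000, 2_000, 4_000):
    print(f"{n} tokens -> {n * n:,} pairwise scores")
```

Doubling the number of tokens quadruples the number of pairwise scores, which is the growth that makes very long chats expensive.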

Pro Tip: Start a New Chat When Changing Topics

If you’re switching from talking about coffee to asking for help with coding, start a new chat. Keeping one massive chat for everything makes the model juggle too much unrelated context, leading to confusion and hallucinations.

Even Claude will sometimes prompt you with a message along the lines of:

“Hey, you’ve been talking for a while — consider starting a new chat to keep things smooth.”

Bonus: Cleaner Content for Better Context

If you want to feed an article or webpage into your LLM, avoid just pasting it as-is. Instead, try using a tool like Jina AI’s Reader:

- Go to the webpage you want to convert.

- In the address bar, type r.jina.ai/ in front of the URL.

- Press enter.

The Reader will convert the page into clean markdown, a format that’s much easier for LLMs to process and understand.
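The same prefix trick can be scripted. Below is a minimal Python sketch, assuming the service in question is Jina AI’s Reader at r.jina.ai and that it returns UTF-8 text. The helper names `reader_url` and `fetch_markdown` are hypothetical, the example.com address is a placeholder, and the service may apply rate limits or other usage terms.

```python
from urllib.request import Request, urlopen

READER_PREFIX = "https://r.jina.ai/"


def reader_url(page_url: str) -> str:
    """Prefix a page URL so the reader service returns it as markdown."""
    return READER_PREFIX + page_url


def fetch_markdown(page_url: str, timeout: float = 30.0) -> str:
    """Download the converted page (needs network access; assumes UTF-8 text)."""
    request = Request(reader_url(page_url), headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


print(reader_url("https://example.com/article"))
# https://r.jina.ai/https://example.com/article
```

Calling `fetch_markdown("https://example.com/article")` would return the page as markdown text, ready to paste into a chat.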

Final Thoughts

Just like us, LLMs have limitations. They have short-term memory, can lose track in long conversations, and get overwhelmed when handling too much diverse information. But now that you understand how context windows work — and how to manage them — you’ll be able to get much better performance out of your AI chats.

Remember:

- Keep it short and focused.

- Upgrade context size if your hardware supports it.

- Start a new chat when switching topics.

- Use clean-up tools like Jina AI’s Reader (r.jina.ai) for better input.

And just like your favorite coffee chat — the better the setup, the better the conversation.
