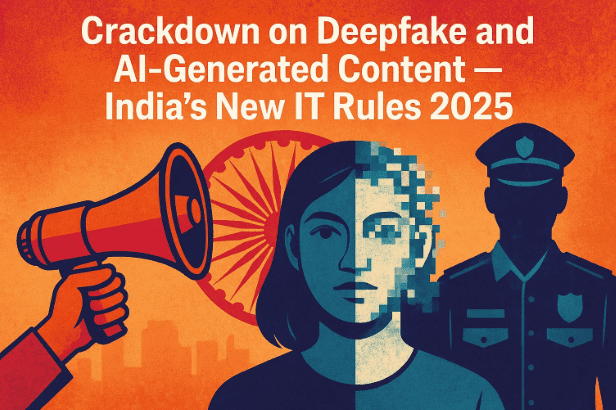

In recent years, the line between what’s real and what’s fake online has grown dangerously thin. A single manipulated video or voice clip can spark controversy, ruin reputations, or even influence elections. One moment that brought this issue into the national spotlight was the viral deepfake video of actor Rashmika Mandanna. Her public statement captured a growing fear: if it can happen to celebrities, it can happen to anyone.

India has now decided to take a strong stance. The government is preparing to enforce new IT Rules 2025 aimed squarely at deepfake videos, AI-generated images, and synthetic content circulating online. These amendments mark one of the most comprehensive efforts globally to combat AI misuse.

In this article, we’ll explore what these new IT Rules mean, what specific measures are being introduced, how they will affect content creators, platforms, and everyday users — and why this move matters for the future of AI ethics and online trust.

🧩 1. Background: How Deepfakes Became a National Concern

Before diving into laws and definitions, it’s important to understand why the Indian government felt compelled to act.

In 2023, a disturbing deepfake video of actor Rashmika Mandanna surfaced online. It looked so real that millions believed it was genuine — until the actor herself clarified it wasn’t. She shared, “I feel really hurt to even have to talk about this deepfake video of me. It’s scary how technology is being misused. If I were still in school or college, I might not have survived this mentally.”

This wasn’t an isolated incident. Around the same time, several fake videos of politicians, journalists, and influencers also started circulating. These weren’t casual parodies — they were digitally fabricated pieces of misinformation designed to deceive.

Deepfakes are created using AI algorithms that analyze facial data, voice patterns, and movement to replace or modify real footage. When used responsibly, such technology can power education, films, accessibility tools, and even medical training simulations. But when used unethically, it can destroy reputations, spread fake news, or manipulate elections.

India’s rapidly expanding digital space, with over 850 million internet users, made it an easy target for such synthetic misinformation. This is why the government finally stepped in.

⚖️ 2. Why India Needed Stronger IT Rules

Until now, India’s Information Technology Act, 2000 and the IT (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 covered basic online content moderation. However, they weren’t built for a world where AI-generated voices and faces could perfectly mimic humans.

Misinformation campaigns, election manipulation, revenge porn, and fake celebrity endorsements using AI all increased dramatically by 2024. Law enforcement struggled to prosecute such cases because existing definitions didn’t classify deepfakes properly.

Therefore, the 2025 IT Rules Amendment aims to:

- Clearly define synthetic and AI-generated content.

- Make creators and platforms label or watermark AI content.

- Introduce accountability and timely takedowns.

- Protect individuals from non-consensual or harmful AI misuse.

In simple terms, the government wants to separate what’s “AI-made” from what’s “human-made” — a digital truth filter.

🏛️ 3. The 2025 IT Rules — An Overview

The amendment, officially titled “Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2025”, is being introduced under Section 87 of the IT Act, 2000 by the Ministry of Electronics and Information Technology (MeitY).

A draft version was released for public comment, open until November 6, 2025, with enforcement likely starting November 15, 2025.

At its core, the amendment focuses on synthetic media — content created or modified using AI or algorithms that appears authentic but is not real.

Let’s now understand the main changes introduced.

🔍 4. Definition of Synthetic and AI-Generated Content

Every law begins with clear definitions — and this one does too.

The amendment introduces a new term: “synthetically generated information.”

According to the draft:

“Information that is artificially or algorithmically created, generated, modified, or altered using a computer resource in a manner that appears reasonably authentic and true.”

In simple words, if a photo, video, or audio clip is made using AI or digital manipulation and looks real but isn’t, it qualifies as synthetic content.

That includes:

- AI-generated voices and cloned speech.

- Deepfake videos replacing one person’s face with another.

- AI-enhanced photos that alter reality.

- Fake news or AI-written text presented as factual.

By defining this clearly, the draft rules would bring deepfakes, AI-edited media, and cloned voices under explicit legal scrutiny for the first time.

🪞 5. Transparency and Labelling Requirements

One of the most striking parts of the 2025 rules is mandatory transparency for AI-generated content.

If someone uploads an AI-generated video, they must clearly label it. The government has proposed strict formatting rules similar to the health warnings on cigarette packets.

Here’s what the rule requires:

- For Videos:

  - At least 10% of the visible surface area must show a label such as “This video has been generated using Artificial Intelligence.”

  - The text must remain visible throughout the video duration.

- For Audio Content:

  - The first 10% of playback time must include a clear announcement stating the audio is AI-generated.

  - Example: “This audio clip has been generated by artificial intelligence.”

- For Images and Text:

  - Metadata must include the source, origin tool, and modification history so platforms and investigators can trace its authenticity.

This approach mirrors what some European and Chinese regulators have already adopted. It ensures users know exactly when they’re viewing AI-made content, not a real recording.
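To make the 10% thresholds concrete, here is a minimal sketch of the arithmetic a creator or editing tool might run before publishing. The function names are hypothetical, and the percentages are the figures described in this article rather than values checked against the final notified text, so treat it as an illustration only.

```python
# Illustrative only: thresholds are the figures described in this article,
# not values taken from the final notified rules.
LABEL_AREA_PERCENT = 10   # share of the visible frame the label should cover
AUDIO_INTRO_PERCENT = 10  # share of playback time reserved for the disclosure


def min_label_area(width_px: int, height_px: int) -> int:
    """Smallest label area (in pixels) covering the required share of the frame."""
    total = width_px * height_px
    # Integer ceiling division avoids floating-point rounding surprises.
    return (total * LABEL_AREA_PERCENT + 99) // 100


def full_width_banner_height(width_px: int, height_px: int) -> int:
    """Height (in pixels) of a full-width banner whose area meets the minimum."""
    area = min_label_area(width_px, height_px)
    return (area + width_px - 1) // width_px


def audio_disclosure_window(duration_s: float) -> float:
    """Length in seconds of the opening window that should carry the disclosure."""
    return duration_s * AUDIO_INTRO_PERCENT / 100
```

For a 1920×1080 video, `full_width_banner_height(1920, 1080)` gives 108 pixels, and for a 60-second audio clip `audio_disclosure_window(60)` gives 6.0 seconds. A full-width banner is only one possible layout. Any label shape works in this sketch as long as its area reaches the minimum.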

🧱 6. Due Diligence Rules for Platforms

Now that we’ve covered creators, let’s move to the platforms that host such content.

The amended rules add a new clause — Rule 3(3A) — which lays out special obligations for intermediaries (like social media sites) and creation platforms (like AI tools or editing software).

Key obligations include:

- AI creation tools (e.g., ChatGPT, Midjourney, Runway) must ensure transparency by embedding metadata and allowing users to label AI content easily.

- Social media platforms (e.g., Instagram, Facebook, YouTube) must enforce policies requiring users to label AI-generated content before posting.

- If unlawful synthetic content (such as defamation, impersonation, or election manipulation) appears, platforms must remove it within 36 hours of being flagged through a government or court order.

Essentially, both the creator and the host are now responsible for identifying and disclosing AI content — a shared accountability model.
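As a rough illustration of what these obligations could look like inside a platform's tooling, the sketch below models a provenance record and the 36-hour removal window. The class, field, and function names are assumptions made for this example. The draft rules do not prescribe a data format, and the 36-hour figure is the one reported in this article.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timedelta, timezone

# Removal window as reported in this article (assumption, not verified legal text).
TAKEDOWN_WINDOW = timedelta(hours=36)


@dataclass
class ProvenanceRecord:
    """Hypothetical metadata a creation tool could embed in synthetic content."""
    source: str
    origin_tool: str
    modification_history: list = field(default_factory=list)
    synthetic: bool = True


def to_json(record: ProvenanceRecord) -> str:
    """Serialise the record so it can travel with the file or post."""
    return json.dumps(asdict(record))


def takedown_deadline(flagged_at: datetime) -> datetime:
    """Latest time by which flagged content should be removed."""
    return flagged_at + TAKEDOWN_WINDOW


def is_overdue(flagged_at: datetime, now: datetime) -> bool:
    """True once the removal window has fully elapsed."""
    return now > takedown_deadline(flagged_at)


if __name__ == "__main__":
    record = ProvenanceRecord(source="user-upload", origin_tool="example-image-model")
    print(to_json(record))
    flagged = datetime(2025, 11, 15, 9, 0, tzinfo=timezone.utc)
    print(takedown_deadline(flagged).isoformat())
```

Using timezone-aware timestamps is a deliberate choice here: a deadline measured in hours should not shift depending on where the server or the reviewer happens to be.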

🏛️ 7. Accountability and Oversight Mechanisms

Whenever the government introduces such broad powers, concerns arise about how they’ll be used. The 2025 amendment tries to balance control with transparency.

Key checks and balances include:

- Only senior officers — Joint Secretary level or above — in the central government can order takedowns.

- In states, only officers of DIG rank or equivalent can issue such directions.

- Every takedown order must specify the legal basis, the content ID, and the reason for removal.

- Blanket bans without justification are not permitted.

This framework is intended to limit arbitrary content removal and to protect the right to free speech under Article 19 of the Indian Constitution.
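A platform's compliance team might screen incoming orders against these checks before acting. The sketch below is a simplified, hypothetical intake check: the `TakedownOrder` fields are invented for illustration, and whether the issuing officer meets the rank requirement is assumed to be verified elsewhere and passed in as a flag.

```python
from dataclasses import dataclass


@dataclass
class TakedownOrder:
    """Hypothetical representation of an incoming takedown order."""
    issuer_rank_ok: bool  # assumed to be verified separately (e.g. Joint Secretary or DIG level)
    legal_basis: str
    content_id: str
    reason: str


def order_problems(order: TakedownOrder) -> list:
    """Return a list of reasons the order looks incomplete; empty means no issues found."""
    problems = []
    if not order.issuer_rank_ok:
        problems.append("issuer rank not verified")
    # Each of these must be present and non-blank, per the checks described above.
    for name in ("legal_basis", "content_id", "reason"):
        if not getattr(order, name).strip():
            problems.append(f"missing {name}")
    return problems
```

An empty list only means the order passes these formal checks. It says nothing about whether the order is legally sound, which remains a question for counsel.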

🛡️ 8. Protection, Safe Harbour, and Penalties

Social media companies often fear legal liability for content their users upload. The amendment reaffirms the “safe harbour” principle, protecting platforms that act responsibly.

If an intermediary:

- Follows the due diligence rules, and

- Removes flagged unlawful content within the prescribed time,

then it remains protected from prosecution.

However, if it ignores government or court directives, or fails to mark AI-generated content transparently, the protection may be revoked — exposing it to legal penalties.

This creates an incentive for platforms to cooperate quickly while maintaining due process.

🌍 9. Global Context: How Other Countries Handle Deepfakes

India is not alone in facing this challenge. Around the world, governments are racing to regulate AI content.

| Country/Region | Regulation | Key Feature |
|---|---|---|
| European Union | AI Act & Digital Services Act | Mandatory risk assessment, transparency for AI-generated content |
| China | 2023 Deep Synthesis Regulation | All AI videos must include a visible watermark |
| United States | State-level AI laws (California, Texas, New York) | Deepfake election content banned within 90 days of voting |
| South Korea | AI Transparency Bill (in draft) | Platforms must label synthetic media |
| India (2025) | IT Rules Amendment | 10% surface warning, metadata traceability, 36-hour takedown |

India’s approach stands out because it directly combines content authenticity, accountability, and user transparency — not just one or two aspects.

⚙️ 10. Real-World Challenges and Implementation Hurdles

While the new rules sound comprehensive on paper, enforcement won’t be simple.

- Detection Technology: Identifying deepfakes automatically at scale is technically complex. AI detection models themselves can be fooled by newer generation techniques.

- Platform Compliance: Global platforms like Meta or YouTube will need to redesign their systems to comply with India’s specific labelling rules.

- Free Speech Balance: Over-regulation could discourage satire, art, or parody, which also use synthetic tools.

- User Awareness: Many ordinary users share AI content unknowingly. Educating them will be as crucial as punishing offenders.

Despite these challenges, this amendment sends a clear message — India will not tolerate AI misuse that harms public trust.

💡 11. Opportunities for Ethical AI Innovation

Interestingly, these laws aren’t meant to stop AI innovation. They’re meant to guide it responsibly.

Companies working with AI video, image, or speech synthesis can now:

- Develop ethical AI tools with built-in watermarking and detection.

- Help build deepfake detection frameworks for public agencies.

- Create educational and assistive content that remains transparent about its AI origins.

If implemented properly, India could become a global benchmark for AI regulation — balancing creativity with safety.

❓ 12. Frequently Asked Questions (FAQ)

Q1: Will making AI-based parody videos or memes become illegal?

Not necessarily. The new rules target deceptive or harmful synthetic media — not creative or humorous content — as long as it’s clearly labelled as AI-generated and doesn’t defame or impersonate anyone.

Q2: Who decides whether a deepfake is harmful?

Authorities can act based on complaints, court orders, or government notices. The order must come from a senior officer with a valid legal reason.

Q3: What if a creator doesn’t know their content qualifies as AI-generated?

Ignorance won’t be an excuse. Platforms are expected to build systems that help users self-label AI content before uploading.

Q4: How will these rules affect elections?

Election-related misinformation using AI deepfakes will face strict scrutiny. The Election Commission may coordinate with MeitY to ensure compliance during campaign periods.

Q5: Can individuals report deepfakes of themselves?

Yes. Victims can file complaints through cybercrime portals or directly to the platform hosting the content. Platforms must act within 36 hours once a valid request is made.

🧭 13. Conclusion

The IT Rules Amendment 2025 is arguably India’s strongest response yet to the dangers of synthetic media. By defining deepfakes, mandating clear labelling, ensuring traceability, and holding both creators and platforms accountable, the government aims to protect citizens from deception in the digital age.

Yet, as with all regulations, success will depend on implementation, transparency, and technological readiness.

If these rules are applied fairly — protecting citizens without stifling innovation — India could emerge as a global leader in ethical AI governance.

In a world where truth itself can be digitally faked, such a framework isn’t just policy — it’s protection for democracy, privacy, and digital dignity.

Disclaimer

This article is for informational purposes only and does not constitute legal advice. For official updates or legal interpretation, refer to the Ministry of Electronics and Information Technology (MeitY) website or the official Gazette notification when published.
